Lindemann–Weierstrass theorem


In transcendental number theory, the Lindemann–Weierstrass theorem is a result that is very useful in establishing the transcendence of numbers. It states the following:

Lindemann–Weierstrass theorem. If [math]\displaystyle{ \alpha_1, \dots, \alpha_n }[/math] are algebraic numbers that are linearly independent over the rational numbers [math]\displaystyle{ \mathbb{Q} }[/math], then [math]\displaystyle{ e^{\alpha_1}, \dots, e^{\alpha_n} }[/math] are algebraically independent over [math]\displaystyle{ \mathbb{Q} }[/math].

In other words, the extension field [math]\displaystyle{ \mathbb{Q}(e^{\alpha_1}, \dots, e^{\alpha_n}) }[/math] has transcendence degree n over [math]\displaystyle{ \mathbb{Q} }[/math].

An equivalent formulation (Baker 1990) is the following:

Equivalent formulation. If [math]\displaystyle{ \alpha_1, \dots, \alpha_n }[/math] are distinct algebraic numbers, then the exponentials [math]\displaystyle{ e^{\alpha_1}, \dots, e^{\alpha_n} }[/math] are linearly independent over the algebraic numbers.

This equivalence transforms a linear relation over the algebraic numbers into an algebraic relation over [math]\displaystyle{ \mathbb{Q} }[/math] by using the fact that a symmetric polynomial whose arguments are all conjugates of one another gives a rational number.

The theorem is named for Ferdinand von Lindemann and Karl Weierstrass. Lindemann proved in 1882 that [math]\displaystyle{ e^{\alpha} }[/math] is transcendental for every non-zero algebraic number α, thereby establishing that π is transcendental (see below).[1] Weierstrass proved the above more general statement in 1885.[2]

The theorem, along with the Gelfond–Schneider theorem, is extended by Baker's theorem,[3] and all of these would be further generalized by Schanuel's conjecture.

Naming convention

The theorem is also known variously as the Hermite–Lindemann theorem and the Hermite–Lindemann–Weierstrass theorem. Charles Hermite first proved the simpler theorem where the exponents [math]\displaystyle{ \alpha_i }[/math] are required to be rational integers and linear independence is only assured over the rational integers,[4][5] a result sometimes referred to as Hermite's theorem.[6] Although that appears to be a special case of the above theorem, the general result can be reduced to this simpler case. Lindemann was the first to allow algebraic numbers into Hermite's work in 1882.[1] Shortly afterwards Weierstrass obtained the full result,[2] and further simplifications have been made by several mathematicians, most notably by David Hilbert[7] and Paul Gordan.[8]

Transcendence of e and π

The transcendence of e and that of π are direct corollaries of this theorem.

Suppose α is a non-zero algebraic number; then {α} is a linearly independent set over the rationals, and therefore by the first formulation of the theorem [math]\displaystyle{ \{e^{\alpha}\} }[/math] is an algebraically independent set; in other words, [math]\displaystyle{ e^{\alpha} }[/math] is transcendental. In particular, [math]\displaystyle{ e^1 = e }[/math] is transcendental. (A more elementary proof that e is transcendental is outlined in the article on transcendental numbers.)

Alternatively, by the second formulation of the theorem, if α is a non-zero algebraic number, then {0, α} is a set of distinct algebraic numbers, and so the set [math]\displaystyle{ \{e^0, e^{\alpha}\} = \{1, e^{\alpha}\} }[/math] is linearly independent over the algebraic numbers. In particular, [math]\displaystyle{ e^{\alpha} }[/math] cannot be algebraic, and so it is transcendental.

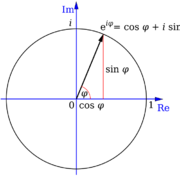

To prove that π is transcendental, we prove that it is not algebraic. If π were algebraic, πi would be algebraic as well, and then by the Lindemann–Weierstrass theorem [math]\displaystyle{ e^{\pi i} = -1 }[/math] (see Euler's identity) would be transcendental, a contradiction. Therefore π is not algebraic, which means that it is transcendental.

A slight variant on the same proof will show that if α is a non-zero algebraic number then sin(α), cos(α), tan(α) and their hyperbolic counterparts are also transcendental.

p-adic conjecture

p-adic Lindemann–Weierstrass conjecture. Suppose p is some prime number and [math]\displaystyle{ \alpha_1, \dots, \alpha_n }[/math] are p-adic numbers which are algebraic and linearly independent over [math]\displaystyle{ \mathbb{Q} }[/math], such that [math]\displaystyle{ |\alpha_i|_p \lt 1/p }[/math] for all i; then the p-adic exponentials [math]\displaystyle{ \exp_p(\alpha_1), \dots, \exp_p(\alpha_n) }[/math] are p-adic numbers that are algebraically independent over [math]\displaystyle{ \mathbb{Q} }[/math].

Modular conjecture

An analogue of the theorem involving the modular function j was conjectured by Daniel Bertrand in 1997, and remains an open problem.[9] Writing [math]\displaystyle{ q = e^{2\pi i\tau} }[/math] for the square of the nome and j(τ) = J(q), the conjecture is as follows.

Modular conjecture. Let [math]\displaystyle{ q_1, \dots, q_n }[/math] be non-zero algebraic numbers in the complex unit disc such that the 3n numbers

- [math]\displaystyle{ \left \{ J(q_1), J'(q_1), J''(q_1), \ldots, J(q_n), J'(q_n), J''(q_n) \right \} }[/math]

are algebraically dependent over [math]\displaystyle{ \mathbb{Q} }[/math]. Then there exist two indices 1 ≤ i < j ≤ n such that [math]\displaystyle{ q_i }[/math] and [math]\displaystyle{ q_j }[/math] are multiplicatively dependent.

Lindemann–Weierstrass theorem

Lindemann–Weierstrass theorem (Baker's reformulation). If [math]\displaystyle{ a_1, \dots, a_n }[/math] are algebraic numbers, and [math]\displaystyle{ \alpha_1, \dots, \alpha_n }[/math] are distinct algebraic numbers, then[10]

- [math]\displaystyle{ a_1 e^{\alpha_1} + a_2 e^{\alpha_2} + \cdots + a_n e^{\alpha_n} = 0 }[/math]

has only the trivial solution [math]\displaystyle{ a_i = 0 }[/math] for all [math]\displaystyle{ i = 1, \dots, n. }[/math]

Proof

The proof relies on two preliminary lemmas. Notice that Lemma B by itself is already sufficient to deduce the original statement of the Lindemann–Weierstrass theorem.

Preliminary lemmas

Lemma A. Let c(1), ..., c(r) be integers and, for every k between 1 and r, let [math]\displaystyle{ \{\gamma(k)_1, \dots, \gamma(k)_{m(k)}\} }[/math] be the roots of a non-zero polynomial with integer coefficients [math]\displaystyle{ T_k(x) }[/math]. If [math]\displaystyle{ \gamma(k)_i \neq \gamma(u)_v }[/math] whenever (k, i) ≠ (u, v), then

- [math]\displaystyle{ c(1)\left (e^{\gamma(1)_1}+\cdots+ e^{\gamma(1)_{m(1)}} \right ) + \cdots + c(r) \left (e^{\gamma(r)_1}+\cdots+ e^{\gamma(r)_{m(r)}} \right) = 0 }[/math]

has only the trivial solution [math]\displaystyle{ c(i)=0 }[/math] for all [math]\displaystyle{ i = 1, \dots, r. }[/math]

Proof of Lemma A. To simplify the notation set:

- [math]\displaystyle{ \begin{align} & n_0 =0, & & \\ & n_i =\sum\nolimits_{k=1}^i m(k), & & i=1,\ldots,r \\ & n=n_r, & & \\ & \alpha_{n_{i-1}+j} =\gamma(i)_j, & & 1\leq i\leq r,\ 1\leq j\leq m(i) \\ & \beta_{n_{i-1}+j} =c(i). \end{align} }[/math]

Then the statement to prove becomes that, unless all the [math]\displaystyle{ \beta_k }[/math] vanish,

- [math]\displaystyle{ \sum_{k=1}^n \beta_k e^{\alpha_k}\neq 0. }[/math]

Let p be a prime number and define the following polynomials:

- [math]\displaystyle{ f_i(x) = \frac {\ell^{np} (x-\alpha_1)^p \cdots (x-\alpha_n)^p}{(x-\alpha_i)}, }[/math]

where ℓ is a non-zero integer such that [math]\displaystyle{ \ell\alpha_1,\ldots,\ell\alpha_n }[/math] are all algebraic integers. Define[11]

- [math]\displaystyle{ I_i(s) = \int^s_0 e^{s-x} f_i(x) \, dx. }[/math]

Using integration by parts we arrive at

- [math]\displaystyle{ I_i(s) = e^s \sum_{j=0}^{np-1} f_i^{(j)}(0) - \sum_{j=0}^{np-1} f_i^{(j)}(s), }[/math]

where [math]\displaystyle{ np-1 }[/math] is the degree of [math]\displaystyle{ f_i }[/math], and [math]\displaystyle{ f_i^{(j)} }[/math] is the j-th derivative of [math]\displaystyle{ f_i }[/math]. This also holds for complex s (in which case the integral is understood as a contour integral, for example along the straight segment from 0 to s) because

- [math]\displaystyle{ -e^{s-x} \sum_{j=0}^{np-1} f_i^{(j)}(x) }[/math]

is a primitive of [math]\displaystyle{ e^{s-x} f_i(x) }[/math].
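The closed form above can be checked numerically. The following is a minimal sketch, not part of the proof: the test polynomial `f`, the endpoint `s`, and all helper names are illustrative assumptions, and the contour integral along the segment from 0 to s is approximated with a composite Simpson rule.

```python
import cmath

def poly_eval(coeffs, x):
    """Evaluate the polynomial with coefficients [c0, c1, ...] at x (Horner)."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result

def poly_deriv(coeffs):
    """Coefficient list of the derivative."""
    return [k * c for k, c in enumerate(coeffs)][1:]

def closed_form(coeffs, s):
    """e^s * sum_j f^(j)(0) - sum_j f^(j)(s), summed over all derivatives of f."""
    at_zero, at_s = 0, 0
    d = list(coeffs)
    while d:
        at_zero += poly_eval(d, 0)
        at_s += poly_eval(d, s)
        d = poly_deriv(d)
    return cmath.exp(s) * at_zero - at_s

def integral(coeffs, s, steps=2000):
    """Simpson approximation of the integral of e^(s-x) f(x) along the segment 0 -> s."""
    def g(t):
        x = t * s  # parametrize the segment as x = t*s, so dx = s dt
        return cmath.exp(s - x) * poly_eval(coeffs, x) * s
    h = 1.0 / steps
    total = g(0.0) + g(1.0)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * g(k * h)
    return total * h / 3

f = [2, -3, 0, 1]   # f(x) = x^3 - 3x + 2, an arbitrary test polynomial
s = 1.5 + 0.5j      # an arbitrary complex endpoint
print(abs(integral(f, s) - closed_form(f, s)))  # should be close to zero
```

The difference printed at the end is limited only by the quadrature and floating-point error, which is consistent with the identity holding for complex s as well.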

Consider the following sum:

- [math]\displaystyle{ \begin{align} J_i &=\sum_{k=1}^n\beta_k I_i(\alpha_k)\\[5pt] &= \sum_{k=1}^n\beta_k \left ( e^{\alpha_k} \sum_{j=0}^{np-1} f_i^{(j)}(0) - \sum_{j=0}^{np-1} f_i^{(j)}(\alpha_k)\right ) \\[5pt] &=\left(\sum_{j=0}^{np-1}f_i^{(j)}(0)\right)\left(\sum_{k=1}^n \beta_k e^{\alpha_k}\right)-\sum_{k=1}^n\sum_{j=0}^{np-1} \beta_kf_i^{(j)}(\alpha_k)\\[5pt] &= -\sum_{k=1}^n \sum_{j=0}^{np-1} \beta_kf_i^{(j)}(\alpha_k) \end{align} }[/math]

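In the last line we assumed that the conclusion of the Lemma is false, that is, that [math]\displaystyle{ \sum_{k=1}^n \beta_k e^{\alpha_k} = 0 }[/math] with the [math]\displaystyle{ \beta_k }[/math] not all zero. In order to complete the proof we need to reach a contradiction. We will do so by estimating [math]\displaystyle{ |J_1\cdots J_n| }[/math] in two different ways.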

First [math]\displaystyle{ f_i^{(j)}(\alpha_k) }[/math] is an algebraic integer which is divisible by p! for [math]\displaystyle{ j\geq p }[/math] and vanishes for [math]\displaystyle{ j\lt p }[/math] unless [math]\displaystyle{ j=p-1 }[/math] and [math]\displaystyle{ k=i }[/math], in which case it equals

- [math]\displaystyle{ \ell^{np}(p-1)!\prod_{k\neq i}(\alpha_i-\alpha_k)^p. }[/math]

This is not divisible by p when p is large enough because otherwise, putting

- [math]\displaystyle{ \delta_i=\prod_{k\neq i}(\ell\alpha_i-\ell\alpha_k) }[/math]

(which is a non-zero algebraic integer) and calling [math]\displaystyle{ d_i\in\mathbb Z }[/math] the product of its conjugates (which is still non-zero), we would get that p divides [math]\displaystyle{ \ell^p(p-1)!d_i^p }[/math], which is false.
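The divisibility pattern of the derivatives can be observed in a toy case. The sketch below assumes integer values for the [math]\displaystyle{ \alpha_k }[/math] and ℓ = 1, so that everything is an ordinary integer; the sample values and all function names are hypothetical and chosen only for illustration.

```python
from math import factorial, prod

def poly_mul(a, b):
    """Product of two polynomials given as coefficient lists [c0, c1, ...]."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

def poly_deriv(c):
    return [k * v for k, v in enumerate(c)][1:]

def poly_eval(c, x):
    r = 0
    for v in reversed(c):
        r = r * x + v
    return r

def f_poly(alphas, i, p):
    """Coefficients of f_i(x) = prod_k (x - alpha_k)^p / (x - alpha_i), with l = 1."""
    out = [1]
    for k, a in enumerate(alphas):
        for _ in range(p - 1 if k == i else p):
            out = poly_mul(out, [-a, 1])
    return out

def check_derivatives(alphas, p):
    """Return True if every f_i^(j)(alpha_k) behaves as stated in the text."""
    n = len(alphas)
    for i in range(n):
        d = f_poly(alphas, i, p)
        for j in range(n * p):                      # j = 0, ..., np - 1
            for k, a in enumerate(alphas):
                value = poly_eval(d, a)
                if j >= p:                          # divisible by p!
                    ok = value % factorial(p) == 0
                elif j == p - 1 and k == i:         # the single exceptional term
                    special = factorial(p - 1) * prod(
                        (alphas[i] - b) ** p for m, b in enumerate(alphas) if m != i)
                    ok = value == special and value % p != 0
                else:                               # vanishes
                    ok = value == 0
                if not ok:
                    return False
            d = poly_deriv(d)
    return True

# Toy data: p = 5 is a prime larger than every difference |alpha_i - alpha_k|.
print(check_derivatives([1, 2, 4], 5))
```

With `alphas = [0, 5]` and `p = 5` the check fails, because the exceptional term is then divisible by p; this is the reason the argument requires p to be large enough.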

So [math]\displaystyle{ J_i }[/math] is a non-zero algebraic integer divisible by (p − 1)!. Now

- [math]\displaystyle{ J_i=-\sum_{j=0}^{np-1}\sum_{t=1}^r c(t)\left(f_i^{(j)}(\alpha_{n_{t-1}+1}) + \cdots + f_i^{(j)}(\alpha_{n_t})\right). }[/math]

Since each [math]\displaystyle{ f_i(x) }[/math] is obtained by dividing a fixed polynomial with integer coefficients by [math]\displaystyle{ (x-\alpha_i) }[/math], it is of the form

- [math]\displaystyle{ f_i(x)=\sum_{m=0}^{np-1}g_m(\alpha_i)x^m, }[/math]

where [math]\displaystyle{ g_m }[/math] is a polynomial (with integer coefficients) independent of i. The same holds for the derivatives [math]\displaystyle{ f_i^{(j)}(x) }[/math].

Hence, by the fundamental theorem of symmetric polynomials,

- [math]\displaystyle{ f_i^{(j)}(\alpha_{n_{t-1}+1})+\cdots+f_i^{(j)}(\alpha_{n_t}) }[/math]

is a fixed polynomial with rational coefficients evaluated in [math]\displaystyle{ \alpha_i }[/math] (this is seen by grouping the same powers of [math]\displaystyle{ \alpha_{n_{t-1}+1},\dots,\alpha_{n_t} }[/math] appearing in the expansion and using the fact that these algebraic numbers are a complete set of conjugates). So the same is true of [math]\displaystyle{ J_i }[/math], i.e. it equals [math]\displaystyle{ G(\alpha_i) }[/math], where G is a polynomial with rational coefficients independent of i.
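The key fact used here, that a symmetric expression in a complete set of conjugates is rational, can be illustrated with power sums. The sketch below is an illustration only: it assumes a monic integer polynomial (so the power sums are integers rather than merely rational), uses Newton's identities, and the function name `power_sums` is hypothetical.

```python
def power_sums(coeffs, K):
    """Power sums p_1..p_K of the roots of the monic polynomial
    x^m + coeffs[m-1]*x^(m-1) + ... + coeffs[0], via Newton's identities."""
    m = len(coeffs)
    # Elementary symmetric polynomials e_1..e_m of the roots (Vieta's formulas).
    e = [0] + [(-1) ** j * coeffs[m - j] for j in range(1, m + 1)]
    p = [m]  # p_0 = number of roots
    for k in range(1, K + 1):
        total = 0
        for j in range(1, min(k - 1, m) + 1):
            total += (-1) ** (j - 1) * e[j] * p[k - j]
        if k <= m:
            total += (-1) ** (k - 1) * k * e[k]
        p.append(total)
    return p[1:]

# Conjugates of the golden ratio: the two roots of x^2 - x - 1.
phi, psi = (1 + 5 ** 0.5) / 2, (1 - 5 ** 0.5) / 2
exact = power_sums([-1, -1], 6)
numeric = [phi ** k + psi ** k for k in range(1, 7)]
print(exact)
print([round(v, 9) for v in numeric])
```

Although each root is irrational, every sum of equal powers over the full set of conjugates is computed from the integer coefficients alone, which is the mechanism behind the grouping argument in the text.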

Finally [math]\displaystyle{ J_1\cdots J_n=G(\alpha_1)\cdots G(\alpha_n) }[/math] is rational (again by the fundamental theorem of symmetric polynomials) and is a non-zero algebraic integer divisible by [math]\displaystyle{ (p-1)!^n }[/math] (since the [math]\displaystyle{ J_i }[/math]'s are algebraic integers divisible by [math]\displaystyle{ (p-1)! }[/math]). Therefore

- [math]\displaystyle{ |J_1\cdots J_n|\geq (p-1)!^n. }[/math]

However, directly from the definition of the integral, one has:

- [math]\displaystyle{ |I_i(\alpha_k)| \leq |\alpha_k|e^{|\alpha_k|}F_i(|\alpha_k|), }[/math]

where [math]\displaystyle{ F_i }[/math] is the polynomial whose coefficients are the absolute values of those of [math]\displaystyle{ f_i }[/math] (this follows directly from the definition of [math]\displaystyle{ I_i(s) }[/math]). Thus

- [math]\displaystyle{ |J_i|\leq \sum_{k=1}^n \left |\beta_k\alpha_k \right |e^{|\alpha_k|}F_i \left ( \left |\alpha_k \right| \right ) }[/math]

and so by the construction of the [math]\displaystyle{ f_i }[/math]'s we have [math]\displaystyle{ |J_1\cdots J_n|\le C^p }[/math] for a sufficiently large C independent of p, which contradicts the previous inequality. This proves Lemma A. ∎
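The contradiction rests on the factorial lower bound eventually outgrowing any exponential upper bound. As a rough illustration, the following sketch searches for the first prime p at which [math]\displaystyle{ (p-1)!^n }[/math] exceeds [math]\displaystyle{ C^p }[/math]; the sample values of C and n are arbitrary assumptions, not constants taken from the proof.

```python
from math import factorial

def is_prime(m):
    """Trial-division primality test, adequate for small m."""
    return m >= 2 and all(m % d for d in range(2, int(m ** 0.5) + 1))

def first_prime_beating(C, n):
    """Smallest prime p with (p-1)!^n > C^p."""
    p = 2
    while not (is_prime(p) and factorial(p - 1) ** n > C ** p):
        p += 1
    return p

print(first_prime_beating(10, 2))
```

For any fixed C and n such a prime exists, since the ratio of [math]\displaystyle{ (p-1)!^n }[/math] to [math]\displaystyle{ C^p }[/math] grows without bound as p increases.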

Lemma B. If b(1), ..., b(n) are integers and γ(1), ..., γ(n) are distinct algebraic numbers, then

- [math]\displaystyle{ b(1)e^{\gamma(1)}+\cdots+ b(n)e^{\gamma(n)} = 0 }[/math]

has only the trivial solution [math]\displaystyle{ b(i)=0 }[/math] for all [math]\displaystyle{ i = 1, \dots, n. }[/math]

Proof of Lemma B: Assuming

- [math]\displaystyle{ b(1)e^{\gamma(1)}+\cdots+ b(n)e^{\gamma(n)}= 0, }[/math]

we will derive a contradiction, thus proving Lemma B.

Let us choose a polynomial with integer coefficients which vanishes on all the [math]\displaystyle{ \gamma(k) }[/math]'s and let [math]\displaystyle{ \gamma(1),\ldots,\gamma(n),\gamma(n+1),\ldots,\gamma(N) }[/math] be all its distinct roots. Let b(n + 1) = ... = b(N) = 0.

The polynomial

- [math]\displaystyle{ P(x_1,\dots,x_N)=\prod_{\sigma\in S_N}(b(1) x_{\sigma(1)}+\cdots+b(N) x_{\sigma(N)}) }[/math]

vanishes at [math]\displaystyle{ (e^{\gamma(1)},\dots,e^{\gamma(N)}) }[/math] by assumption. Since the product is symmetric, for any [math]\displaystyle{ \tau\in S_N }[/math] the monomials [math]\displaystyle{ x_{\tau(1)}^{h_1}\cdots x_{\tau(N)}^{h_N} }[/math] and [math]\displaystyle{ x_1^{h_1}\cdots x_N^{h_N} }[/math] have the same coefficient in the expansion of P.

Thus, expanding [math]\displaystyle{ P(e^{\gamma(1)},\dots,e^{\gamma(N)}) }[/math] accordingly and grouping the terms with the same exponent, we see that the resulting exponents [math]\displaystyle{ h_1\gamma(1)+\dots+h_N\gamma(N) }[/math] form a complete set of conjugates and, if two terms have conjugate exponents, they are multiplied by the same coefficient.

So we are in the situation of Lemma A. To reach a contradiction it suffices to see that at least one of the coefficients is non-zero. This is seen by equipping [math]\displaystyle{ \C }[/math] with the lexicographic order and by choosing for each factor in the product the term with non-zero coefficient which has maximum exponent according to this ordering: the product of these terms has non-zero coefficient in the expansion and does not get simplified by any other term. This proves Lemma B. ∎
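The symmetry of the product P used in the proof of Lemma B can be checked by brute force for small N. This is a minimal sketch under stated assumptions: polynomials are stored as dictionaries from exponent tuples to integer coefficients, the sample coefficients b are arbitrary, and all names are hypothetical.

```python
from itertools import permutations

def mul_linear(poly, linear):
    """Multiply {exponent tuple: coeff} by a linear form {variable index: coeff}."""
    out = {}
    for exps, c in poly.items():
        for var, b in linear.items():
            e = list(exps)
            e[var] += 1
            key = tuple(e)
            out[key] = out.get(key, 0) + c * b
    return {k: v for k, v in out.items() if v != 0}

def symmetrized_product(b):
    """P = product over sigma in S_N of (b[0]*x_sigma(0) + ... + b[N-1]*x_sigma(N-1))."""
    N = len(b)
    poly = {(0,) * N: 1}
    for sigma in permutations(range(N)):
        linear = {var: b[pos] for pos, var in enumerate(sigma)}
        poly = mul_linear(poly, linear)
    return poly

def is_symmetric(poly):
    """True if permuting the exponents of a monomial never changes its coefficient."""
    return all(poly.get(tuple(e[i] for i in tau), 0) == c
               for e, c in poly.items()
               for tau in permutations(range(len(e))))

# Toy integers b(1), b(2), b(3); the zero plays the role of the padding b(n+1) = ... = 0.
P = symmetrized_product([1, -2, 0])
print(len(P), is_symmetric(P))
```

Because permuting the variables only permutes the factors, the expanded product is symmetric, so exponents that are conjugate to one another receive the same coefficient, as Lemma A requires.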

Final step

We turn now to prove the theorem: Let a(1), ..., a(n) be non-zero algebraic numbers, and α(1), ..., α(n) distinct algebraic numbers. Then let us assume that:

- [math]\displaystyle{ a(1)e^{\alpha(1)}+\cdots + a(n)e^{\alpha(n)} = 0. }[/math]

We will show that this leads to a contradiction and thus prove the theorem. The proof is very similar to that of Lemma B, except that this time the choices are made over the a(i)'s:

For every i ∈ {1, ..., n}, a(i) is algebraic, so it is a root of an irreducible polynomial with integer coefficients of degree d(i). Let us denote the distinct roots of this polynomial [math]\displaystyle{ a(i)_1, \dots, a(i)_{d(i)} }[/math], with [math]\displaystyle{ a(i)_1 = a(i) }[/math].

Let S be the functions σ which choose one element from each of the sequences (1, ..., d(1)), (1, ..., d(2)), ..., (1, ..., d(n)), so that for every 1 ≤ i ≤ n, σ(i) is an integer between 1 and d(i). We form the polynomial in the variables [math]\displaystyle{ x_{11},\dots,x_{1d(1)},\dots,x_{n1},\dots,x_{nd(n)},y_1,\dots,y_n }[/math]

- [math]\displaystyle{ Q(x_{11},\dots,x_{nd(n)},y_1,\dots,y_n)=\prod\nolimits_{\sigma\in S}\left(x_{1\sigma(1)}y_1+\dots+x_{n\sigma(n)}y_n\right). }[/math]

Since the product is over all the possible choice functions σ, Q is symmetric in [math]\displaystyle{ x_{i1},\dots,x_{id(i)} }[/math] for every i. Therefore Q is a polynomial with integer coefficients in elementary symmetric polynomials of the above variables, for every i, and in the variables [math]\displaystyle{ y_i }[/math]. Each of the latter symmetric polynomials is a rational number when evaluated in [math]\displaystyle{ a(i)_1,\dots,a(i)_{d(i)} }[/math].
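A small exact computation shows how the product over choice functions removes the irrational coefficients. The sketch below assumes, purely for illustration, that all coefficients lie in the field generated by the square root of 2, and stores r + s√2 as the integer pair (r, s); the sample coefficients and function names are hypothetical.

```python
from itertools import product

def mul(u, v):
    """(r1 + s1*sqrt2) * (r2 + s2*sqrt2), with numbers stored as pairs (r, s)."""
    return (u[0] * v[0] + 2 * u[1] * v[1], u[0] * v[1] + u[1] * v[0])

def add(u, v):
    return (u[0] + v[0], u[1] + v[1])

def Q_evaluated(conjugates):
    """Expand the product over choice functions sigma of sum_i a(i)_sigma(i) * y_i.

    conjugates[i] lists all conjugates of a(i). The result maps exponent
    tuples of (y_1, ..., y_n) to coefficients stored as pairs (r, s)."""
    n = len(conjugates)
    poly = {(0,) * n: (1, 0)}
    for choice in product(*conjugates):   # one conjugate picked for each a(i)
        out = {}
        for exps, c in poly.items():
            for i, a in enumerate(choice):
                key = exps[:i] + (exps[i] + 1,) + exps[i + 1:]
                out[key] = add(out.get(key, (0, 0)), mul(c, a))
        poly = out
    return poly

# a(1) = sqrt2 with conjugates +sqrt2 and -sqrt2; a(2) = 3 is its own only conjugate.
Q = Q_evaluated([[(0, 1), (0, -1)], [(3, 0)]])
print(Q)
```

In this example the product of the two factors is [math]\displaystyle{ 9y_2^2 - 2y_1^2 }[/math]: the √2 component of every coefficient is zero, in line with the symmetry argument above.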

The evaluated polynomial [math]\displaystyle{ Q(a(1)_1,\dots,a(n)_{d(n)},e^{\alpha(1)},\dots,e^{\alpha(n)}) }[/math] vanishes because one of the choices is just σ(i) = 1 for all i, for which the corresponding factor vanishes according to our assumption above. Thus, the evaluated polynomial is a sum of the form

- [math]\displaystyle{ b(1)e^{\beta(1)}+ b(2)e^{\beta(2)}+ \cdots + b(N)e^{\beta(N)}= 0, }[/math]

where we already grouped the terms with the same exponent. So in the left-hand side we have distinct values β(1), ..., β(N), each of which is still algebraic (being a sum of algebraic numbers) and coefficients [math]\displaystyle{ b(1),\dots,b(N)\in\mathbb Q }[/math]. The sum is nontrivial: if [math]\displaystyle{ \alpha(i) }[/math] is maximal in the lexicographic order, the coefficient of [math]\displaystyle{ e^{|S|\alpha(i)} }[/math] is just a product of [math]\displaystyle{ a(i)_j }[/math]'s (with possible repetitions), which is non-zero.

By multiplying the equation with an appropriate integer factor, we get an identical equation except that now b(1), ..., b(N) are all integers. Therefore, according to Lemma B, the equality cannot hold, and we are led to a contradiction which completes the proof. ∎

Note that Lemma A is sufficient to prove that e is irrational, since otherwise we may write e = p / q, where both p and q are non-zero integers, but by Lemma A we would have qe − p ≠ 0, which is a contradiction. Lemma A also suffices to prove that π is irrational, since otherwise we may write π = k / n, where both k and n are integers, and then ±iπ are the roots of [math]\displaystyle{ n^2x^2 + k^2 = 0 }[/math]; thus Lemma A would give [math]\displaystyle{ 2 - 1 - 1 = 2e^0 + e^{i\pi} + e^{-i\pi} \neq 0 }[/math], which is false.

Similarly, Lemma B is sufficient to prove that e is transcendental, since Lemma B says that if a0, ..., an are integers not all of which are zero, then

- [math]\displaystyle{ a_ne^n+\cdots+a_0e^0\ne 0. }[/math]

Lemma B also suffices to prove that π is transcendental, since otherwise iπ would be algebraic and Lemma B would give [math]\displaystyle{ 1 + e^{i\pi} \neq 0 }[/math], which is false.

Equivalence of the two statements

Baker's formulation of the theorem clearly implies the first formulation. Indeed, if [math]\displaystyle{ \alpha(1),\ldots,\alpha(n) }[/math] are algebraic numbers that are linearly independent over [math]\displaystyle{ \Q }[/math], and

- [math]\displaystyle{ P(x_1, \ldots, x_n)= \sum b_{i_1,\ldots, i_n} x_1^{i_1}\cdots x_n^{i_n} }[/math]

is a polynomial with rational coefficients, then we have

- [math]\displaystyle{ P\left(e^{\alpha(1)},\dots,e^{\alpha(n)}\right)= \sum b_{i_1,\dots,i_n} e^{i_1 \alpha(1) + \cdots + i_n \alpha(n)}, }[/math]

and since [math]\displaystyle{ \alpha(1),\ldots,\alpha(n) }[/math] are algebraic numbers which are linearly independent over the rationals, the numbers [math]\displaystyle{ i_1 \alpha(1) + \cdots + i_n \alpha(n) }[/math] are algebraic and they are distinct for distinct n-tuples [math]\displaystyle{ (i_1,\dots,i_n) }[/math]. So from Baker's formulation of the theorem we get [math]\displaystyle{ b_{i_1,\ldots,i_n}=0 }[/math] for all n-tuples [math]\displaystyle{ (i_1,\dots,i_n) }[/math].

Now assume that the first formulation of the theorem holds. For [math]\displaystyle{ n=1 }[/math] Baker's formulation is trivial, so let us assume that [math]\displaystyle{ n\gt 1 }[/math], and let [math]\displaystyle{ a(1),\ldots,a(n) }[/math] be non-zero algebraic numbers, and [math]\displaystyle{ \alpha(1),\ldots,\alpha(n) }[/math] distinct algebraic numbers such that:

- [math]\displaystyle{ a(1)e^{\alpha(1)} + \cdots + a(n)e^{\alpha(n)} = 0. }[/math]

As seen in the previous section, and with the same notation used there, the value of the polynomial

- [math]\displaystyle{ Q(x_{11},\ldots,x_{nd(n)},y_1,\dots,y_n)=\prod\nolimits_{\sigma\in S}\left(x_{1\sigma(1)}y_1+\dots+x_{n\sigma(n)}y_n\right), }[/math]

at

- [math]\displaystyle{ \left (a(1)_1,\ldots,a(n)_{d(n)},e^{\alpha(1)},\ldots,e^{\alpha(n)} \right) }[/math]

has an expression of the form

- [math]\displaystyle{ b(1)e^{\beta(1)}+ b(2)e^{\beta(2)}+ \cdots + b(M)e^{\beta(M)}= 0, }[/math]

where we have grouped the exponentials having the same exponent. Here, as proved above, [math]\displaystyle{ b(1),\ldots, b(M) }[/math] are rational numbers, not all equal to zero, and each exponent [math]\displaystyle{ \beta(m) }[/math] is a linear combination of [math]\displaystyle{ \alpha(i) }[/math] with integer coefficients. Then, since [math]\displaystyle{ n\gt 1 }[/math] and [math]\displaystyle{ \alpha(1),\ldots,\alpha(n) }[/math] are pairwise distinct, the [math]\displaystyle{ \Q }[/math]-vector subspace [math]\displaystyle{ V }[/math] of [math]\displaystyle{ \C }[/math] generated by [math]\displaystyle{ \alpha(1),\ldots,\alpha(n) }[/math] is not trivial and we can pick [math]\displaystyle{ \alpha(i_1),\ldots,\alpha(i_k) }[/math] to form a basis for [math]\displaystyle{ V. }[/math] For each [math]\displaystyle{ m=1,\dots,M }[/math], we have

- [math]\displaystyle{ \begin{align} \beta(m) = q_{m,1} \alpha(i_1) + \cdots + q_{m,k} \alpha(i_k), && q_{m,j} = \frac{c_{m,j}}{d_{m,j}}; \qquad c_{m,j}, d_{m,j} \in \Z. \end{align} }[/math]

For each [math]\displaystyle{ j=1,\ldots, k, }[/math] let [math]\displaystyle{ d_j }[/math] be the least common multiple of all the [math]\displaystyle{ d_{m,j} }[/math] for [math]\displaystyle{ m=1,\ldots, M }[/math], and put [math]\displaystyle{ v_j = \tfrac{1}{d_j} \alpha(i_j) }[/math]. Then [math]\displaystyle{ v_1,\ldots,v_k }[/math] are algebraic numbers, they form a basis of [math]\displaystyle{ V }[/math], and each [math]\displaystyle{ \beta(m) }[/math] is a linear combination of the [math]\displaystyle{ v_j }[/math] with integer coefficients. By multiplying the relation

- [math]\displaystyle{ b(1)e^{\beta(1)}+ b(2)e^{\beta(2)}+ \cdots + b(M)e^{\beta(M)}= 0, }[/math]

by [math]\displaystyle{ e^{N(v_1+ \cdots + v_k)} }[/math], where [math]\displaystyle{ N }[/math] is a large enough positive integer, we get a non-trivial algebraic relation with rational coefficients connecting [math]\displaystyle{ e^{v_1},\ldots,e^{v_k} }[/math], contradicting the first formulation of the theorem.
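The rescaling of the basis can be made concrete. The following sketch takes hypothetical rational coordinates [math]\displaystyle{ q_{m,j} }[/math] of the exponents β(m), computes the common denominators [math]\displaystyle{ d_j }[/math], the integer coordinates with respect to the [math]\displaystyle{ v_j }[/math], and a shift N that makes every exponent non-negative; the sample data and the function name are assumptions made for illustration.

```python
from fractions import Fraction
from math import gcd

def integer_coordinates(q):
    """q[m][j] is the rational coordinate of beta(m) along alpha(i_j).

    Returns (d, c, N): the least common denominators d_j, the integer
    coordinates c[m][j] of beta(m) in the basis v_j = alpha(i_j) / d_j,
    and a shift N such that every c[m][j] + N is non-negative."""
    k = len(q[0])
    d = []
    for j in range(k):
        lcm = 1
        for row in q:
            den = row[j].denominator
            lcm = lcm * den // gcd(lcm, den)
        d.append(lcm)
    c = [[int(row[j] * d[j]) for j in range(k)] for row in q]
    N = max(0, -min(min(row) for row in c))
    return d, c, N

# Hypothetical coordinates of three exponents beta(1), beta(2), beta(3).
q = [[Fraction(1, 2), Fraction(-1, 3)],
     [Fraction(3, 4), Fraction(2)],
     [Fraction(0), Fraction(-5, 6)]]
d, c, N = integer_coordinates(q)
print(d, c, N)
```

After the shift by N, each term [math]\displaystyle{ e^{\beta(m)} }[/math] becomes a monomial in [math]\displaystyle{ e^{v_1},\ldots,e^{v_k} }[/math] with non-negative integer exponents, and distinct β(m) give distinct monomials, so the relation is a genuine non-trivial polynomial relation.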

Related result

A variant of the Lindemann–Weierstrass theorem in which the algebraic numbers are replaced by the transcendental Liouville numbers (or, more generally, the U numbers) is also known.[12]

See also

- Gelfond–Schneider theorem

- Baker's theorem; an extension of the Gelfond–Schneider theorem

- Schanuel's conjecture; if proven, it would imply both the Gelfond–Schneider theorem and the Lindemann–Weierstrass theorem

Notes

- ↑ 1.0 1.1 Lindemann 1882a, Lindemann 1882b.

- ↑ 2.0 2.1 Weierstrass 1885, pp. 1067–1086.

- ↑ Murty & Rath 2014, pp. 95–100.

- ↑ Hermite 1873, pp. 18–24.

- ↑ Hermite 1874.

- ↑ Gelfond 2015.

- ↑ Hilbert 1893, pp. 216–219.

- ↑ Gordan 1893, pp. 222–224.

- ↑ Bertrand 1997, pp. 339–350.

- ↑ (in French) French proof of the Lindemann–Weierstrass theorem (PDF)

- ↑ Up to a factor, this is the same integral appearing in the proof that e is a transcendental number, where [math]\displaystyle{ \beta_1 = 1, \dots, \beta_m = m }[/math]. The rest of the proof of the Lemma is analogous to that proof.

- ↑ Chalebgwa, Prince Taboka; Morris, Sidney A. (2022). "Sin, Cos, Exp, and Log of Liouville Numbers". arXiv:2202.11293v1 [math.NT].

References

- Gordan, P. (1893), "Transcendenz von e und π.", Mathematische Annalen 43 (2–3): 222–224, doi:10.1007/bf01443647, https://gdz.sub.uni-goettingen.de/dms/load/img/?PID=GDZPPN002254557&physid=PHYS_0223

- Hermite, C. (1873), "Sur la fonction exponentielle.", Comptes rendus de l'Académie des Sciences de Paris 77: 18–24, http://gallica.bnf.fr/ark:/12148/bpt6k3034n/f18.image

- Hermite, C. (1874), Sur la fonction exponentielle., Paris: Gauthier-Villars, https://archive.org/details/surlafonctionexp00hermuoft

- Hilbert, D. (1893), "Ueber die Transcendenz der Zahlen e und π.", Mathematische Annalen 43 (2–3): 216–219, doi:10.1007/bf01443645, https://gdz.sub.uni-goettingen.de/index.php?id=11&PID=GDZPPN002254565, retrieved 2018-12-24

- Lindemann, F. (1882), "Über die Ludolph'sche Zahl.", Sitzungsberichte der Königlich Preussischen Akademie der Wissenschaften zu Berlin 2: 679–682, https://archive.org/details/sitzungsberichte1882deutsch/page/679

- Lindemann, F. (1882), "Über die Zahl π.", Mathematische Annalen 20: 213–225, doi:10.1007/bf01446522, https://gdz.sub.uni-goettingen.de/id/PPN235181684_0020?tify=%7B%22view%22:%22info%22,%22pages%22:%5B227%5D%7D, retrieved 2018-12-24

- Murty, M. Ram; Rath, Purusottam (2014). "Baker's Theorem". Transcendental Numbers. pp. 95–100. doi:10.1007/978-1-4939-0832-5_19. ISBN 978-1-4939-0831-8. https://books.google.com/books?id=-4jkAwAAQBAJ&pg=PA95.

- Weierstrass, K. (1885), "Zu Lindemann's Abhandlung. 'Über die Ludolph'sche Zahl'.", Sitzungsberichte der Königlich Preussischen Akademie der Wissenschaften zu Berlin 5: 1067–1085, https://books.google.com/books?id=jhlEAQAAMAAJ&pg=PA1067

Further reading

- Baker, Alan (1990), Transcendental number theory, Cambridge Mathematical Library (2nd ed.), Cambridge University Press, ISBN 978-0-521-39791-9, https://books.google.com/books?id=SmsCqiQMvvgC

- Bertrand, D. (1997), "Theta functions and transcendence", The Ramanujan Journal 1 (4): 339–350, doi:10.1023/A:1009749608672

- Gelfond, A.O. (2015), Transcendental and Algebraic Numbers, Dover Books on Mathematics, New York: Dover Publications, ISBN 978-0-486-49526-2, https://books.google.com/books?id=408wBgAAQBAJ

- Jacobson, Nathan (2009), Basic Algebra, I (2nd ed.), Dover Publications, ISBN 978-0-486-47189-1, https://books.google.com/books?id=JHFpv0tKiBAC&printsec

External links

- Weisstein, Eric W.. "Hermite-Lindemann Theorem". http://mathworld.wolfram.com/Hermite-LindemannTheorem.html.

- Weisstein, Eric W.. "Lindemann-Weierstrass Theorem". http://mathworld.wolfram.com/Lindemann-WeierstrassTheorem.html.
